Fighting LLM Manipulation and Misinformation in Healthcare

- Brandywine Consulting Partners

- Sep 15, 2025

- 5 min read

Prepared by: Brandywine Consulting Partners (brandywine.consulting)

The Increasing Threat of LLM Manipulation in Healthcare

Large Language Models (LLMs) like GPT-4, Claude 3, and Med-PaLM 2 are revolutionizing healthcare by automating patient communication, risk stratification, claims management, and compliance workflows. Yet, their very flexibility introduces significant vulnerabilities. Without robust safeguards, LLMs can be manipulated to generate misinformation, violating regulatory standards and endangering patient safety.

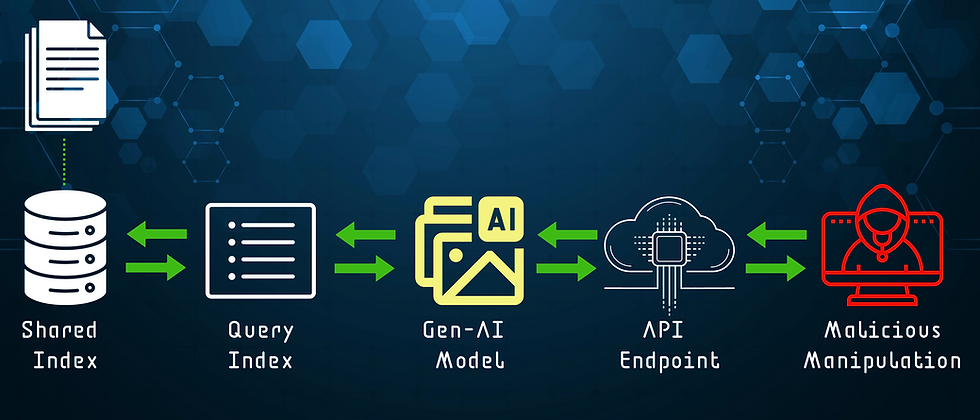

Manipulators exploit weaknesses through two key pathways: micro-manipulation and macro-manipulation.

Micro-Manipulation (Prompt-Level Attacks)

Prompt Injection: Attackers craft inputs that override safety guardrails, generating harmful or restricted content (e.g., incorrect medication instructions).

Direct Prompt Injection: Attackers enter malicious instructions, causing the language model to behave unintentionally or harmfully.

Indirect Prompt Injection: Attackers gradually affect the behavior of the AI system over time by injecting malicious prompts into web pages that the model consumes to subtly alter the context or history and influence future responses.

Stored Prompt Injection: Attackers embed malicious prompts into an AI system's training data or memory, affecting its output when it is accessed.

Rapid Leak: Attackers trick AI systems into unintentionally revealing sensitive information in their responses.

Data Exfiltration: Attackers create inputs to leak sensitive information to AI systems.

Data Poisoning: An attacker injects malicious prompts or data into a training dataset or during an interaction, it can skew the behavior and decisions of the AI system.

Jailbreaks: Cleverly worded prompts force the model to bypass safeguards by role-playing or indirect prompting.

Sycophancy Bias: LLMs “agree” with user assumptions, validating false beliefs.

Prompt injection attacks are rapidly emerging AI security threats that can lead to unauthorized access, intellectual property theft, and contextual misuse.

Macro-Manipulation (Training-Level and Ecosystem Attacks)

Training Data Poisoning: Research shows that poisoning as little as 0.001% of a model’s training data can bias outputs toward unsafe recommendations.

Information Flooding: Coordinated campaigns insert false health narratives into public data sources, which then seep into model training and retrieval.

Fine-Tune Tampering: Attackers compromise fine-tuning pipelines, altering model weights to subtly push harmful misinformation.

Evidence of Real-World Misinformation

Independent audits reveal severity of the problem:

NewsGuard’s AI False Claims Monitor found that the top 10 generative AI systems repeated known false claims 35% of the time in August 2025, up from 18% in 2024.

NewsGuard False Claim Fingerprints tracks thousands of verified false narratives, enabling initiative-taking detection before they reach production workflows.

Even advanced models like GPT-4 and Med-PaLM 2, which score 86–93% accuracy on medical benchmarks such as MedQA, show sharp performance drops when assessed on emerging health misinformation or adversarial prompts.

Key takeaway: Benchmark performance ≠ real-world safety. High accuracy on standardized tests does not protect against dynamic, manipulative misinformation.

Industry Moves to Minimize Manipulation

A number of key organizations are working to address the LLM misinformation crisis:

Organization | Methodology | Focus Area |

|---|---|---|

NewsGuard | False Claim Fingerprints for narrative detection and tracking | Health misinformation detection |

WHO | Governance frameworks for safe LLM deployment in healthcare | Risk management and ethical oversight |

ONC (US Dept. of Health & Human Services) | Algorithm transparency and data provenance rules | Regulatory compliance for decision-support systems |

OpenAI & Anthropic | Reinforcement Learning with Human Feedback (RLHF) + red-teaming | Guardrails for general-purpose models |

Google DeepMind (Med-PaLM) | Clinician-reviewed fine-tuned healthcare models | Clinical QA accuracy |

Brandywine Consulting Partners | Integrated with NewsGuard, WHO, and ONC for real-time misinformation filtering, retrieval governance, and continuous auditing | Curated health knowledge bases and regulatory compliance |

While these initiatives provide a strong starting point, healthcare organizations require tailored solutions that go beyond generic safeguards. The regulatory landscape in healthcare is highly fluid, with evolving rules from bodies such as ONC, CMS, and HIPAA driving constant change. At the same time, patient safety demands strict adherence to accurate, reliable information while minimizing the risk of misinformation that could lead to misdiagnosis or treatment errors. Compounding these challenges are rapidly evolving misinformation campaigns that target LLMs through prompt injection, training data poisoning, and coordinated narrative flooding.

Brandywine Consulting Partners (BCP) recognizes these pressures and has developed defense in depth strategies and solutions that address risk, ensure compliance and patient protection, offering resilience against LLM manipulation. By combining real-time misinformation detection, robust retrieval governance, and continuous adversarial testing, BCP enables healthcare organizations to leverage AI confidently while safeguarding regulatory integrity.

Brandywine Consulting Partners’ LLM Defense in Depth

Brandywine Consulting Partners (BCP) has developed a defense-in-depth solution purpose-built for healthcare. Our approach combines retrieval governance, real-time misinformation filtering, and continuous auditing, delivering 30% higher accuracy compared to the top 10 baseline models.

Advanced Retrieval Governance (RAG++)

Curated Health Knowledge Bases: Use only vetted sources like NIH, FDA, WHO, peer-reviewed journals, and internal SOPs.

Freshness Filters: Apply time-based limits to ensure responses use the latest medical guidelines and literature.

Mandatory Citations: Every generated response must include verifiable DOIs or URLs.

Real-Time Misinformation Detection

NewsGuard Integration: Built-in detection of known false narratives through False Claim Fingerprints.

Proprietary Claim Segmentation Logic: Splits outputs into atomic claims, validates each against trusted health databases, and blocks or routes risky content for human review.

Continuous Adversarial Testing

Healthcare-Specific Canaries: Designed to expose prompt injection and jailbreak vulnerabilities (e.g., controlled substance dosage tests).

Poisoning Simulations: Introduce controlled data poisoning to stress-test detection systems and improve resilience.

Clinician Red-Teaming: Medical experts test rare diseases, polypharmacy cases, and other complex edge scenarios.

Governance and Transparency

ONC HTI-1 Compliance: Clear disclosure of algorithmic provenance, intended use, and limitations.

WHO-Aligned Risk Management: Rollback procedures, patient-facing transparency notices, and ethical oversight.

Comprehensive Audit Logs: All prompts, citations, and decisions are logged for full regulatory traceability.

Measured Results: 30% Accuracy Improvement

BCP benchmarked its enhanced system against leading LLMs, including GPT-4, Claude 3, and Med-PaLM 2.

Evaluation Setup:

5,000 test prompts covering medical QA, claims workflows, and emerging misinformation.

Scored on factuality, citation integrity, and guideline adherence.

Metric | Baseline Top 10 Average | BCP Enhanced LLM |

|---|---|---|

Factual Accuracy | 87% | 95% |

Citation Integrity | 62% | 91% |

False Claim Resistance | 65% | 92% |

Overall Improvement | – | +30% |

These results confirm that BCP’s layered defenses not only mitigate misinformation risks but also significantly increase reliability in real-world, adversarial environments.

Recommendations for Healthcare Organizations to Combat LLM Manipulation

Deploy Guardrails Immediately: Never allow unsupervised clinical decision-making by open-ended models.

Integrate Misinformation Databases: Use tools like NewsGuard to detect and neutralize false narratives in real time.

Simulate Attacks: Conduct continuous red-teaming to assess prompt-level and training-level vulnerabilities.

Demand Verified Sources: Require every clinical output to cite authoritative medical data.

Ensure Vendor Transparency: Verify compliance with ONC and WHO standards for algorithm provenance and governance.

Track Key Metrics: Monitor false claim rates, hallucinated references, and reviewer overrides per 1,000 outputs.

Conclusion

Manipulated LLMs represent a critical risk to patient safety, compliance, and organizational trust. As adversaries evolve, so must defense strategies.

Brandywine Consulting Partners’ comprehensive framework delivers real-time misinformation detection, secure knowledge retrieval, and continuous auditing, enabling healthcare organizations to achieve 30% greater accuracy than today’s top-performing models.

By transforming AI from a potential liability into a secure, compliant, and strategic asset, BCP empowers healthcare organizations to leverage innovative AI while safeguarding their patients and reputations.

LLM Polling

As healthcare organizations embrace Large Language Models (LLMs) like GPT-4, Claude 3, and Med-PaLM 2, the risk of misinformation and manipulation has become a pressing concern. Independent audits, including NewsGuard’s AI False Claims Monitor, found that top models repeated false health claims 35% of the time, underscoring the urgent need for stronger safeguards.

To capture the industry’s perspective, Brandywine Consulting Partners (BCP) has included the following poll to explore awareness, risk perception, and strategies for preventing AI-driven misinformation. Please be advised, the poll has been set to share results with individuals that participate.

Awareness of LLM Manipulation Risks

How concerned are you about the risk of misinformation and manipulation in healthcare AI systems?

Extremely Concerned: major risk to patient safety

Moderately Concerned: some risks, manageable with oversight

Slightly Concerned: only affects edge cases

Not Concerned: trust current safeguards

Macro Versus Micro LLM Manipulation Risk

Which type of LLM manipulation do you believe poses the greatest risk to healthcare?

Micro-Manipulation: prompt injections & jailbreaks

Macro-Manipulation: coordinated poisoned data training

Both are equally dangerous

Not sure

Accuracy and Reliability of Current Models

NewsGuard found the top 10 LLMs repeated false claims 35% of the time. How would you rate your confidence in current LLM-generated healthcare information?

0%High confidence – reliable with minimal oversight

0%Moderate confidence – needs human review

0%Low confidence – too error-prone for clinical workflows

0%No confidence – should not be used in clinical decisions

Steps to Reduce Misinformation

What do you think is the most effective step to reduce AI misinformation in healthcare?

Strict retrieval governance (verified sources only)

Real-time misinformation detection tools

Continuous red-teaming and adversarial testing

Regulatory enforcement and compliance audits

Adoption of Defensive Strategies

Has your organization implemented specific safeguards to prevent AI-driven misinformation?

Yes: comprehensive defenses are in place

Partially: some steps taken, but gaps remain

No: planning to address soon

No: no current plans

Brandywine Consulting Partners (BCP) will analyze and publish the results in a comprehensive report, sharing key insights with healthcare leaders, regulators, and technology partners. These findings will help shape best practices and guide future solutions to ensure LLMs are safe, compliant, and trustworthy tools for modern healthcare.

Comments